If a page on your site takes more than three clicks to reach from the homepage, Google’s web crawlers are likely to visit it less often. However, buried pages can lead to greater issues than a lack of engagement from search engines.

Ranking problems are often blamed on content quality, intent mismatches, or competition, while the underlying issue remains quietly in the site’s structure. Pages can be technically sound, well-written, and relevant, yet still struggle because search engines reach them too late or not often enough.

As sites scale, small structural decisions compound, pushing valuable content farther from the areas crawlers prioritize. Crawl depth explains why those buried pages rarely get a fair chance to compete.

This article explains how crawl depth affects websites and how to avoid the issues it causes.

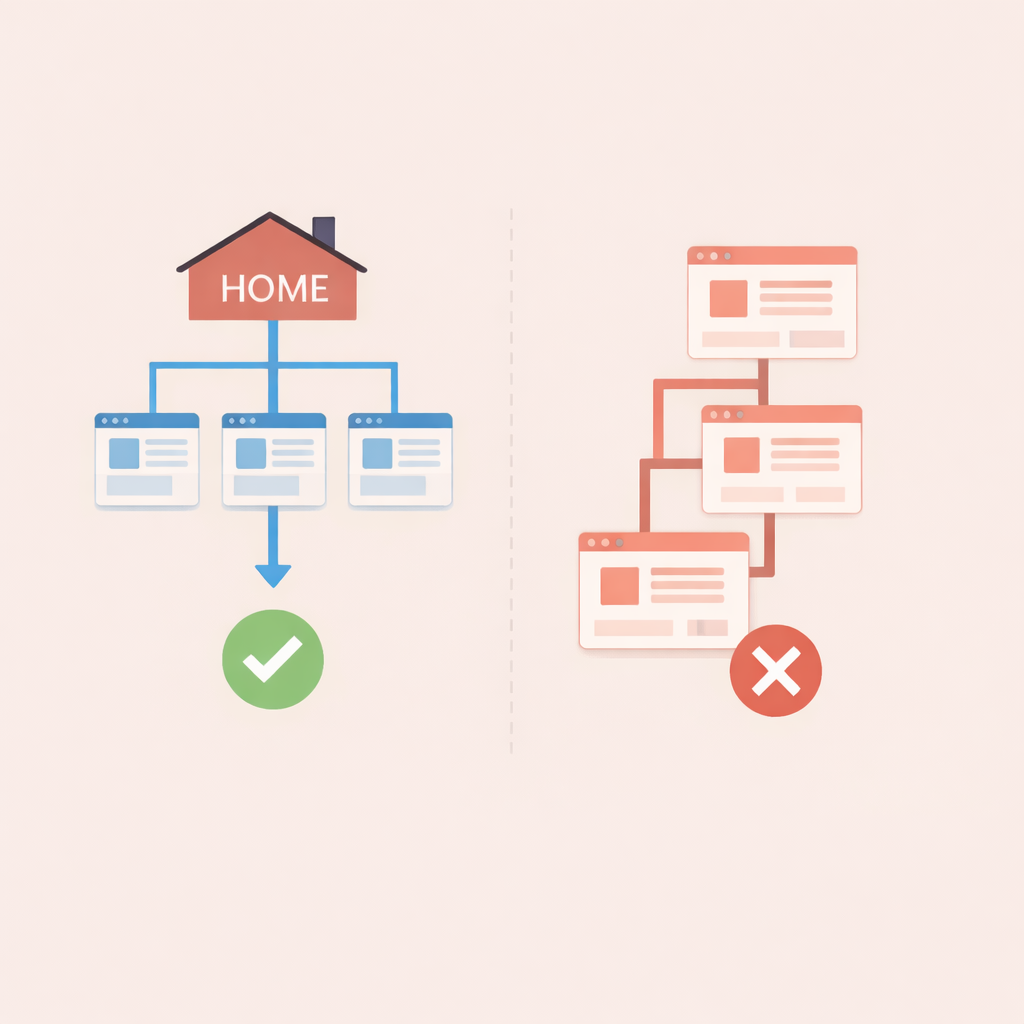

Search engines navigate sites by following links, measuring how many steps it takes to reach each page from the homepage. Pages positioned closer to the site’s core tend to receive more frequent crawls, stronger internal signals, and clearer contextual understanding. When pages sit farther away in the structure, those signals weaken and search engines revisit them less often.

Over time, buried pages lose priority because crawl resources focus on areas that appear more central and connected. Strong content can still underperform when it lives several layers deep, especially on larger sites where thousands of URLs compete for attention. A well-planned structure keeps important pages within easy reach, helping search engines recognize relevance and maintain consistent visibility.
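To make "steps from the homepage" concrete, here is a minimal sketch that computes click depth with a breadth-first search over an internal link graph. The `links` dictionary and its URLs are hypothetical placeholders; in practice the graph would come from a crawler export, and this is only one illustrative way to measure depth, not a description of how any search engine does it.

```python
from collections import deque


def crawl_depths(links, homepage):
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # The first time a page is seen is also its shortest path in a BFS.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/seo"],
    "/blog/seo": ["/blog/seo/crawl-depth"],
    "/blog/seo/crawl-depth": ["/blog/seo/crawl-depth/faq"],
    "/products": [],
}

depths = crawl_depths(links, "/")
for page, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, page)
```

Pages that never appear in the result are unreachable through internal links from the homepage, which is worth checking separately.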

Indexing depends on how easily search engines can discover, revisit, and reassess a page over time. Pages positioned close to primary navigation tend to enter the index faster and receive more consistent updates because crawlers encounter them early and often.

As pages move deeper into the structure, indexing becomes less reliable, especially on sites with a large number of URLs competing for attention. Depth influences indexing in several practical ways:

When crawl resources get stretched, search engines prioritize pages that appear central and well-connected. Pages buried several layers deep often miss those signals, which leads to delayed indexing or inconsistent visibility even when the content meets quality standards.

If indexing feels inconsistent or slow across large sections of your site, crawl depth is often the hidden constraint. Segment helps teams discover pages that search engines miss and prioritize fixes that improve discovery and index stability.

Search engines rely on internal links to understand which pages deserve consistent attention. Pages buried deep in a site tend to receive fewer internal links, which reduces the signals that communicate importance and relevance. As those signals fade, search engines allocate less crawl effort and revisit those URLs less often.
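One rough way to see which pages receive few internal links is to count inbound links per page. The sketch below assumes a hypothetical `links` mapping of each page to the pages it links to; the URLs and the "one link or fewer" cutoff are illustrative assumptions, not thresholds any search engine publishes.

```python
from collections import Counter


def inbound_link_counts(links):
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:
                counts[target] += 1
    return counts


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/guides", "/pricing"],
    "/guides": ["/guides/seo", "/pricing"],
    "/guides/seo": ["/guides/seo/crawl-depth", "/guides"],
    "/guides/seo/crawl-depth": [],
}

counts = inbound_link_counts(links)
# Pages with at most one inbound internal link, excluding the homepage.
weakly_linked = sorted(p for p, n in counts.items() if n <= 1 and p != "/")
print(weakly_linked)
```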

Visibility declines further as depth increases because context becomes harder to interpret. When a page sits several layers away from core sections, it appears less connected to the site’s main themes. Even well-written content can struggle when it lacks proximity to authoritative pages, leading search engines to favor similar topics that live closer to the surface of a stronger internal structure.

Competition also plays a role as depth increases. When multiple pages target related topics, search engines often prioritize the version that feels more accessible and better supported internally. Deeper pages lose out not because they lack value, but because their position suggests lower priority, weaker endorsement, and limited relevance within the broader site hierarchy.

Pinterest faced crawl depth problems as boards, infinite scroll, and filtered feeds generated massive numbers of URLs with weak internal signals. Many valuable pages sat several layers away from strong entry points, which slowed indexing and reduced visibility. Pinterest improved performance by consolidating URL patterns, strengthening hub pages, and improving internal linking between related boards and topics.

HubSpot resolved crawl depth issues as its blog and resource library scaled. Older content became buried under tags, pagination, and nested category paths. HubSpot flattened internal linking by promoting cornerstone pages, improving cross-links between related articles, and reducing reliance on deep tag archives.

Shopify stores frequently suffer from crawl depth problems when product collections stack categories, pagination, and filters without limits. Large catalogs push individual products several clicks away from the homepage, especially when themes rely heavily on layered navigation. Merchants who improve internal linking from collection pages and limit crawlable filters tend to see faster indexing and stronger product visibility.

Depth issues rarely come from a single mistake. Structural decisions made to manage scale often create crawl barriers unless teams actively control hierarchy, links, and URL behavior as sites grow.

Search engines use internal structure to judge importance, and pages buried deep tend to receive fewer signals that reinforce relevance. Reduced crawl frequency and weaker internal links make those pages easier to overlook over time.

Even strong content can fade when its position suggests lower priority within the site. Let’s take a closer look at some of the most notable factors.

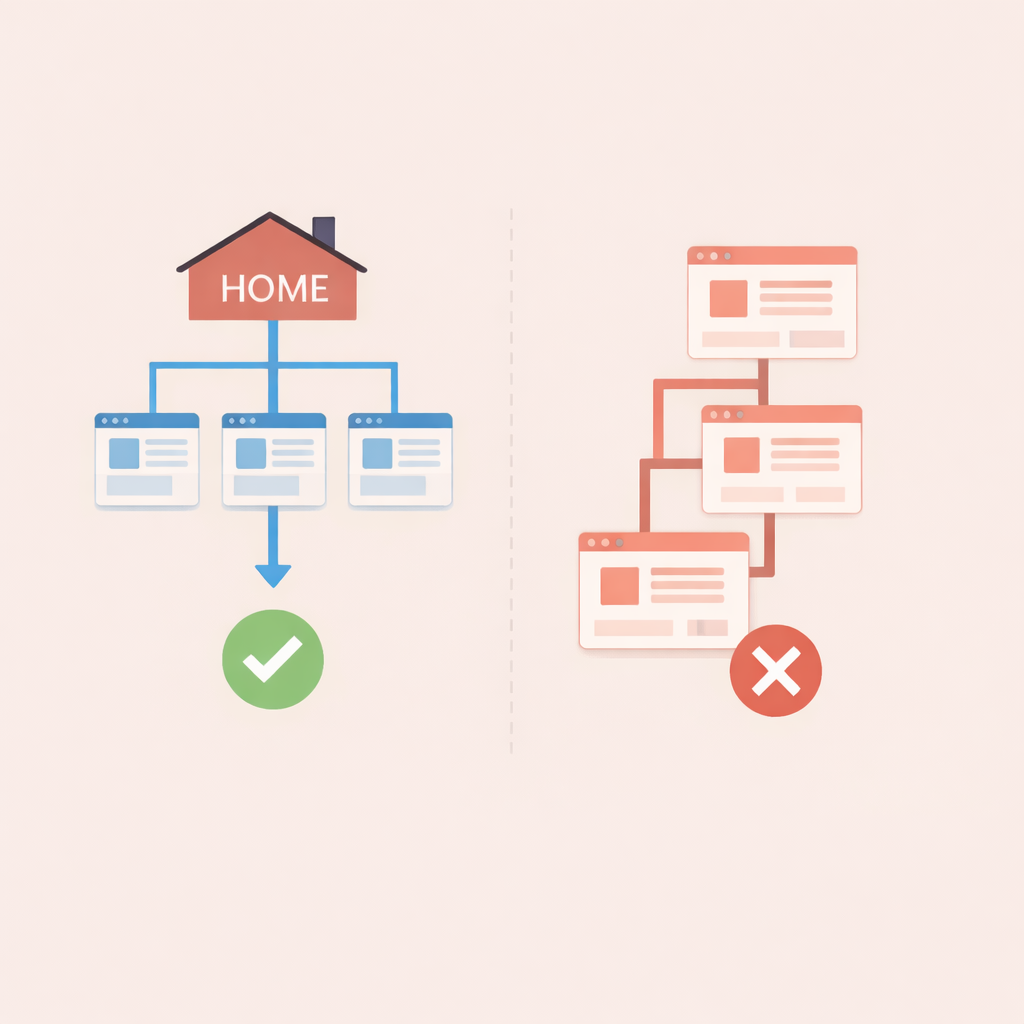

Deep navigation structures develop when menus and link paths expand without clear limits. Primary navigation fills up, and pages get nested under multiple layers to keep layouts manageable. Each added layer increases the number of clicks required to reach deeper content, which quietly pushes those pages farther from crawl priority.

Over time, navigation becomes more about accommodating volume than guiding discovery. Important pages end up buried alongside low-priority ones, and search engines struggle to distinguish which URLs matter most. Without intentional pruning or hierarchy control, navigation depth grows faster than visibility can keep up.

Content sprawl often leads teams to add new layers instead of reinforcing existing sections. As topics expand, categories branch into subcategories, which then branch again without evaluating whether the extra depth improves organization. Each additional layer increases the distance between core hubs and individual pages.

Similar themes end up fragmented across parallel paths, weakening internal signals. Search engines must travel longer routes to reach related content, which reduces crawl efficiency and clarity. Without consolidation and regular pruning, structural depth grows through accumulation rather than intention.

Large collections often rely on pagination and filtering to surface content, which can quietly create long chains of URLs. Each filter or page state generates another layer that crawlers must follow, pushing meaningful pages farther from core navigation.

Over time, these paths multiply faster than teams expect, especially on sites with dynamic sorting or filtering. Common patterns that create excessive depth include:

When these chains grow unchecked, search engines spend crawl budget navigating variations instead of reaching priority pages. Depth increases through repetition rather than intent, which makes important content harder to discover and maintain in the index.
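As a rough illustration of how filter and pagination states multiply, the sketch below groups a list of crawled URLs by path and counts the distinct query-string variants each path has. The `example.com` URLs and parameter names are hypothetical; a real audit would read URLs from a crawl export or server logs.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def variant_counts(urls):
    """Count distinct query-parameter states per URL path."""
    variants = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        # Sort parameters so ?a=1&b=2 and ?b=2&a=1 count as the same state.
        params = tuple(sorted(parse_qsl(parts.query)))
        if params:
            variants[parts.path].add(params)
    return {path: len(states) for path, states in variants.items()}


# Hypothetical crawled URLs.
urls = [
    "https://example.com/shoes",
    "https://example.com/shoes?page=2",
    "https://example.com/shoes?page=3",
    "https://example.com/shoes?color=red&page=2",
    "https://example.com/shoes?page=2&color=red",
    "https://example.com/hats?sort=price",
]

counts = variant_counts(urls)
print(counts)
```

Paths with a high variant count are candidates for limiting which filter combinations are crawlable.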

Problems with crawl depth usually surface through patterns rather than a single clear error. Structural signals, crawl behavior, and performance trends often reveal when pages sit too far from the core of the site. Clear indicators tend to show up once teams know where to look.

These signals usually appear together rather than in isolation. When multiple indicators align, the cause is more likely a structural issue with crawl depth than a content problem.

Tools commonly used to identify crawl depth issues include:

Combining several tools generally yields the best results.

Pages that require four or more internal clicks from the homepage often fall into excessive depth. Search engines can still reach them, but crawl frequency, signal strength, and prioritization tend to decline as distance increases.

Indexing alone does not guarantee consistent visibility. Pages buried deep often get revisited less frequently, which affects how quickly updates get reflected and how confidently search engines assess relevance over time.

Content quality helps, but structure still plays a major role. Pages positioned far from core sections struggle to compete when similar topics exist closer to the surface with stronger internal support.

Site depth describes overall hierarchy, while crawl depth focuses on how search engines traverse links. A site can appear organized visually while still forcing crawlers through long or inefficient paths.
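The difference can be sketched by comparing a URL's folder depth (a stand-in for site hierarchy) with its click depth. The `click_depths` values below are hypothetical numbers of the kind a crawler report might provide, and the gap of two clicks used to flag a mismatch is an arbitrary assumption for illustration.

```python
from urllib.parse import urlsplit


def path_depth(url):
    """Number of non-empty path segments, e.g. /guides/seo -> 2."""
    return len([segment for segment in urlsplit(url).path.split("/") if segment])


# Hypothetical click depths, as a crawler export might report them.
click_depths = {
    "https://example.com/": 0,
    # Nested in the URL hierarchy, but linked directly from the homepage.
    "https://example.com/guides/seo/crawl-depth": 1,
    # Shallow URL, but only reachable through a long paginated archive.
    "https://example.com/old-announcement": 5,
}

for url, clicks in click_depths.items():
    print(f"{url}: path depth {path_depth(url)}, click depth {clicks}")

# Pages that crawlers reach much later than the URL structure suggests.
mismatched = [
    url for url, clicks in click_depths.items() if clicks - path_depth(url) >= 2
]
print(mismatched)
```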

Reviews should happen whenever content scales, navigation changes, or large sections get added. Ongoing checks prevent small structural decisions from turning into widespread visibility issues.

Site structure determines how often search engines reach important pages, how clearly relevance gets reinforced, and how authority flows across the site. When crawl depth grows unchecked, even strong pages lose consistency as crawlers focus on areas that appear closer to the core.

Clear hierarchies, intentional internal linking, and controlled URL growth help search engines maintain a reliable understanding of what matters most. Over time, managing crawl depth protects visibility by aligning site growth with crawl behavior instead of working against it.

If crawl depth is limiting how search engines discover and prioritize your content, addressing it early prevents structural inefficiencies from compounding as your site grows. Segment helps teams identify excessive depth, surface buried pages, and realign internal linking so important content stays within reach.

Book a call with Segment to uncover where crawl paths break down and how an improved structure can support consistent visibility and long-term search performance.